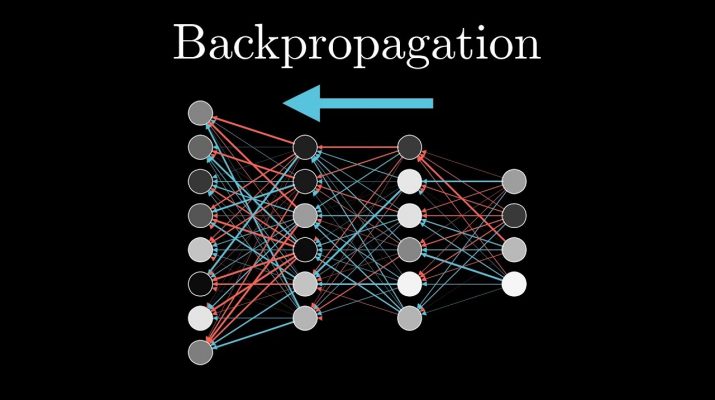

Backpropagation is a widely used machine learning algorithm for training neural networks, in particular feedforward neural networks.

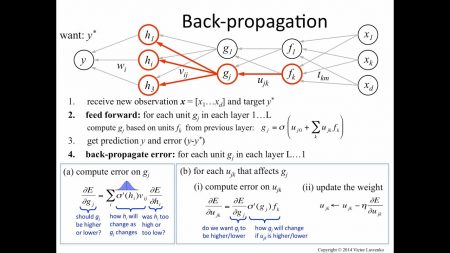

Backpropagation is a supervised learning algorithm for training multilayer perceptrons (a class of artificial neural networks). The algorithm searches the weight space for the minimum of the error function using gradient descent, which for single-layer networks is known as the delta rule. The weights that minimize the error function are then considered a solution to the learning problem.
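A minimal sketch of the delta rule in its batch form, assuming a single linear unit, the squared-error function E = ½ Σ (y − t)², a fixed learning rate and a small made-up dataset generated from t = 2·x1 − 3·x2 + 1. The function names are illustrative and not taken from any library.

```python
# Batch delta rule: gradient descent on E = 1/2 * sum((y - t)**2) for one linear unit.
# The dataset, learning rate and epoch count are illustrative assumptions.

def predict(w, b, x):
    """Linear unit: weighted sum of the inputs plus a bias."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def error(w, b, data):
    """Error function E = 1/2 * sum of squared residuals over the dataset."""
    return 0.5 * sum((predict(w, b, x) - t) ** 2 for x, t in data)


def delta_rule(data, lr=0.05, epochs=2000):
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for x, t in data:
            delta = predict(w, b, x) - t  # dE/dy for this example
            for i, xi in enumerate(x):
                grad_w[i] += delta * xi   # dE/dw_i = (y - t) * x_i
            grad_b += delta               # dE/db = (y - t)
        # Move every parameter a small step against its gradient.
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


# Toy data on a 3x3 grid, generated by t = 2*x1 - 3*x2 + 1.
data = [((x1, x2), 2 * x1 - 3 * x2 + 1)
        for x1 in (0.0, 0.5, 1.0) for x2 in (0.0, 0.5, 1.0)]
w, b = delta_rule(data)
print([round(wi, 3) for wi in w], round(b, 3))
```

Because the toy data are noise-free and linear, the weights settle near w ≈ [2, −3] and b ≈ 1, the point where the error function reaches its minimum of zero.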

Generalizations of backpropagation exist for other artificial neural networks and, more broadly, for other functions. These classes of algorithms are all referred to generically as “backpropagation”.
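One such generalization is reverse-mode automatic differentiation, which runs the same backward sweep over any function assembled from differentiable operations, not only a layered network. The minimal sketch below supports just addition, multiplication and `sin`; the `Var` class and its methods are hypothetical names chosen for illustration.

```python
import math


class Var:
    """Scalar node in a computation graph (hypothetical minimal class)."""

    def __init__(self, value, parents=()):
        self.value = value
        self.grad = 0.0
        # Each parent is stored with the local derivative d(self)/d(parent).
        self.parents = parents

    def __add__(self, other):
        other = other if isinstance(other, Var) else Var(other)
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        other = other if isinstance(other, Var) else Var(other)
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))

    def sin(self):
        return Var(math.sin(self.value), ((self, math.cos(self.value)),))

    def backward(self):
        """Propagate d(self)/d(node) to every node that self depends on."""
        # Topological order guarantees a node's gradient is complete
        # before it is passed on to that node's parents.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent, _ in node.parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, local in node.parents:
                # Chain rule: upstream gradient times local derivative.
                parent.grad += node.grad * local


# f(x, y) = x*y + sin(x), so df/dx = y + cos(x) and df/dy = x.
x, y = Var(2.0), Var(3.0)
f = x * y + x.sin()
f.backward()
print(x.grad, y.grad)
```

Because `x` feeds into both the product and the sine, its gradient is the sum of two contributions, which is why gradients are accumulated with `+=` rather than assigned.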

Backpropagation, short for “backward propagation of errors,” is an algorithm for the supervised learning of artificial neural networks using gradient descent. Given an artificial neural network and an error function, the method computes the gradient of the error function with respect to the network’s weights. It is a generalization of the delta rule for perceptrons to multilayer feedforward neural networks.
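To make the gradient computation concrete, here is a minimal sketch for a tiny 2-2-1 network, assuming sigmoid hidden units, a linear output unit and the squared error E = ½(y − t)² for a single example. `backprop` computes the weight gradients by propagating the output error backward, and a central finite-difference estimate (a hypothetical helper, `numeric_dW1`) is used only to check them.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """2-2-1 network: sigmoid hidden layer, linear output unit."""
    W1, b1, W2, b2 = params
    h = [sigmoid(W1[j][0] * x[0] + W1[j][1] * x[1] + b1[j]) for j in range(2)]
    y = W2[0] * h[0] + W2[1] * h[1] + b2
    return h, y


def loss(params, x, t):
    """Squared error E = 1/2 * (y - t)**2 for a single example."""
    _, y = forward(params, x)
    return 0.5 * (y - t) ** 2


def backprop(params, x, t):
    """Return (dE/dW1, dE/db1, dE/dW2, dE/db2) for one example."""
    W2 = params[2]
    h, y = forward(params, x)
    # The output unit is linear, so its error signal is just dE/dy.
    delta_out = y - t
    dW2 = [delta_out * h[j] for j in range(2)]
    db2 = delta_out
    # Send the error back through W2 and the sigmoid derivative h * (1 - h).
    delta_hid = [delta_out * W2[j] * h[j] * (1.0 - h[j]) for j in range(2)]
    dW1 = [[delta_hid[j] * x[i] for i in range(2)] for j in range(2)]
    db1 = list(delta_hid)
    return dW1, db1, dW2, db2


def numeric_dW1(params, x, t, j, i, eps=1e-6):
    """Central finite-difference estimate of dE/dW1[j][i], used as a check."""
    W1, b1, W2, b2 = params
    plus = [row[:] for row in W1]
    minus = [row[:] for row in W1]
    plus[j][i] += eps
    minus[j][i] -= eps
    return (loss((plus, b1, W2, b2), x, t)
            - loss((minus, b1, W2, b2), x, t)) / (2 * eps)


params = ([[0.1, -0.2], [0.4, 0.3]], [0.0, 0.1], [0.5, -0.6], 0.2)
x, t = (1.0, 2.0), 0.5
dW1, db1, dW2, db2 = backprop(params, x, t)
for j in range(2):
    for i in range(2):
        print(j, i, dW1[j][i], numeric_dW1(params, x, t, j, i))
```

The two columns printed for each hidden weight should agree to many decimal places. Comparing against finite differences like this is a common way to catch sign or indexing mistakes in a hand-written backward pass.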

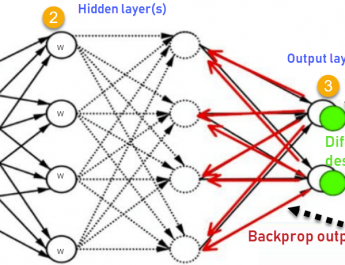

Backpropagation is the foundation of neural network training. It fine-tunes the weights of a neural network based on the error obtained in the previous epoch (a full pass over the training data). Properly tuned weights lower the error rate and make the model more reliable by improving its generalization.
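The epoch-by-epoch loop can be sketched as follows, assuming a 1-3-1 network with tanh hidden units, a linear output, full-batch gradient descent (one weight update per epoch) and a made-up task of fitting t = x² at five points. The error is recorded at the start of each epoch, so the history shows how each update, computed from the previous epoch's error, changes it.

```python
import math

# Hypothetical toy task: fit t = x**2 at five points in [-1, 1].
DATA = [(-1.0, 1.0), (-0.5, 0.25), (0.0, 0.0), (0.5, 0.25), (1.0, 1.0)]
HIDDEN = 3


def forward(p, x):
    """1-3-1 network: tanh hidden layer, linear output unit."""
    h = [math.tanh(p["w1"][j] * x + p["b1"][j]) for j in range(HIDDEN)]
    y = sum(p["w2"][j] * h[j] for j in range(HIDDEN)) + p["b2"]
    return h, y


def epoch_error(p):
    """Error function: half the sum of squared errors over the dataset."""
    return sum(0.5 * (forward(p, x)[1] - t) ** 2 for x, t in DATA)


def train(epochs=5000, lr=0.02):
    # Fixed, asymmetric starting weights keep the run reproducible.
    p = {"w1": [1.0, -0.5, 0.3], "b1": [0.1, 0.2, -0.1],
         "w2": [0.2, -0.3, 0.4], "b2": 0.0}
    history = []
    for _ in range(epochs):
        history.append(epoch_error(p))
        g = {"w1": [0.0] * HIDDEN, "b1": [0.0] * HIDDEN,
             "w2": [0.0] * HIDDEN, "b2": 0.0}
        for x, t in DATA:
            h, y = forward(p, x)        # forward pass
            d_out = y - t               # dE/dy
            g["b2"] += d_out
            for j in range(HIDDEN):     # backward pass
                g["w2"][j] += d_out * h[j]
                # tanh'(z) = 1 - tanh(z)**2
                d_hid = d_out * p["w2"][j] * (1.0 - h[j] ** 2)
                g["w1"][j] += d_hid * x
                g["b1"][j] += d_hid
        # One update per epoch, using the gradient accumulated over the epoch.
        for k in ("w1", "b1", "w2"):
            p[k] = [v - lr * gv for v, gv in zip(p[k], g[k])]
        p["b2"] -= lr * g["b2"]
    return p, history


params, history = train()
print(f"error at epoch 1: {history[0]:.4f}, at epoch {len(history)}: {history[-1]:.4f}")
```

Full-batch updates are used here only to keep the loop short; updating after every example or after each mini-batch are common alternatives.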

The algorithm trains a neural network efficiently by applying the chain rule of calculus. In simple terms, after each forward pass through the network, backpropagation performs a backward pass that computes the gradient of the error with respect to each parameter and uses it to adjust the model’s parameters.
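For a single sigmoid unit, the chain rule breaks the gradient into a product of local derivatives, each evaluated with values saved during the forward pass. Assuming input x, weight w, bias b, pre-activation z, activation a = σ(z), target t and squared error E (symbols chosen for this example):

$$
\begin{aligned}
z &= w x + b, \qquad a = \sigma(z) = \frac{1}{1 + e^{-z}}, \qquad E = \tfrac{1}{2}(a - t)^2 \\
\frac{\partial E}{\partial w} &= \frac{\partial E}{\partial a}\,\frac{\partial a}{\partial z}\,\frac{\partial z}{\partial w} = (a - t)\,a(1 - a)\,x \\
\frac{\partial E}{\partial b} &= \frac{\partial E}{\partial a}\,\frac{\partial a}{\partial z}\,\frac{\partial z}{\partial b} = (a - t)\,a(1 - a)
\end{aligned}
$$

For instance, with x = 1, w = 0.5, b = 0 and t = 1, the forward pass gives z = 0.5 and a ≈ 0.6225, so ∂E/∂w ≈ (0.6225 − 1)(0.6225)(0.3775)(1) ≈ −0.0887. The gradient is negative, so a gradient descent step increases w. In a deeper network, the factor ∂E/∂a for a hidden unit is itself assembled from the layer above, which is what makes the pass run backward.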

Backpropagation was one of the first methods to show that artificial neural networks could learn good internal representations, i.e., that their hidden layers learned nontrivial features. Researchers studying multilayer feedforward networks trained with backpropagation found that many nodes learned features similar to those designed by human experts and to those found by neuroscientists studying biological neural networks in mammalian brains. Even more importantly, because the algorithm is efficient and domain experts no longer had to determine appropriate features by hand, backpropagation allowed artificial neural networks to be applied to a much wider range of problems that had previously been off-limits due to time and cost constraints.
