From the Basics of Machine Learning to Understanding TensorFlow Internals with Raphael Gontijo Lopes

Machine Learning

Data has high-level features

Some of those features are called labels

You can extract those features and labels from data using classifiers

Machine Learning is how computers learn to do this (train classifiers)

Supervised Learning is ML where you learn from labeled data

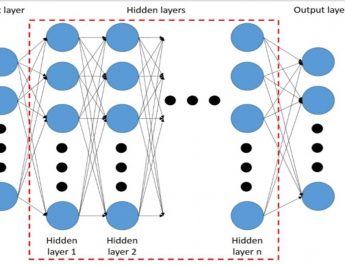

Neural Networks

Data is composed of attributes

Labels can be extracted from those attributes

Some attributes are more relevant for determining a label (so we weight them higher)

Perceptrons use those weights to compute higher-level features
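As a minimal sketch of the weighting idea, a single perceptron can be written as a weighted sum of attributes followed by a threshold. The attribute names, weights, and bias here are made up for illustration and do not come from the talk.

```python
def perceptron(attributes, weights, bias):
    """Weighted sum of the attributes, then a step activation (outputs 0 or 1)."""
    total = sum(w * x for w, x in zip(weights, attributes)) + bias
    return 1 if total > 0 else 0


# Hypothetical attributes: [has_fur, has_wings].
# The larger magnitude on has_wings means that attribute matters more here.
weights = [2.0, -3.0]
bias = -1.0

fur_only = perceptron([1, 0], weights, bias)
fur_and_wings = perceptron([1, 1], weights, bias)
print(fur_only, fur_and_wings)
```

In a real network the weights are not chosen by hand; they are learned from labeled data.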

Layers of perceptrons can approximate a very wide range of functions (including extracting labels from data), and can compute even higher-level features that themselves depend on other high-level features

We look for good weights using Gradient Descent

(repeatedly adjusting all weights in the direction that lowers a loss function, as given by its derivative)
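To make the update rule concrete, here is a small sketch of gradient descent on a one-weight model `y = w * x` with a mean squared error loss. The data, learning rate, and step count are assumptions chosen for the example, not values from the talk.

```python
def loss(w, data):
    """Mean squared error of the model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def loss_gradient(w, data):
    """Derivative of the loss with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in data) / len(data)


def gradient_descent(data, w=0.0, learning_rate=0.05, steps=200):
    """Repeatedly move w a small step against the gradient."""
    for _ in range(steps):
        w -= learning_rate * loss_gradient(w, data)
    return w


# Toy data generated by y = 2 * x, so the weight should approach 2.
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
w = gradient_descent(data)
print(round(w, 3), loss(w, data))
```

A real network has many weights, and the same step is applied to each of them using the partial derivative of the loss with respect to that weight.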

Neural Network Abstractions

Whole layers of Neural Networks can be expressed with a matrix multiplication

GPUs are very fast at multiplying matrices

Whole Neural Networks can be represented by a computational graph, which performs operations over those matrices (or tensors)
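The matrix view of a layer can be sketched in plain Python as follows. The shapes, values, and the choice of ReLU as the activation are illustrative assumptions; in practice a library would run the multiplication on optimized hardware.

```python
def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def dense_layer(inputs, weights, biases):
    """One layer for a whole batch: matrix multiply, add biases, apply ReLU."""
    z = matmul(inputs, weights)
    return [[max(0.0, v + b) for v, b in zip(row, biases)] for row in z]


inputs = [[1.0, 2.0], [0.0, -1.0]]             # 2 examples, 2 attributes each
weights = [[1.0, -1.0, 0.5], [0.0, 2.0, 1.0]]  # 2 inputs feeding 3 units
biases = [0.0, 1.0, -1.0]

outputs = dense_layer(inputs, weights, biases)
print(outputs)
```

Each column of `weights` plays the role of one perceptron, so a single multiplication evaluates every unit in the layer for every example in the batch.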

TensorFlow

Define the computational graph first, then run data through it to train weights
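The define-then-run idea can be illustrated with a toy graph in plain Python. This is not TensorFlow's API: `Node`, `placeholder`, `constant`, `add`, `mul`, and `run` are hypothetical names invented for this sketch, and it only evaluates the graph rather than training weights.

```python
class Node:
    """A graph node: an operation plus the nodes it reads from."""

    def __init__(self, op, inputs=()):
        self.op = op
        self.inputs = inputs


def placeholder(name):
    return Node(("placeholder", name))


def constant(value):
    return Node(("constant", value))


def add(a, b):
    return Node(("add",), (a, b))


def mul(a, b):
    return Node(("mul",), (a, b))


def run(node, feed):
    """Evaluate a node by recursively evaluating its inputs."""
    kind = node.op[0]
    if kind == "placeholder":
        return feed[node.op[1]]
    if kind == "constant":
        return node.op[1]
    left, right = (run(n, feed) for n in node.inputs)
    return left + right if kind == "add" else left * right


# Step 1: define the graph y = 3 * x + 1. Nothing is computed yet.
x = placeholder("x")
y = add(mul(constant(3.0), x), constant(1.0))

# Step 2: run data through the same graph as many times as needed.
first = run(y, {"x": 2.0})
second = run(y, {"x": -1.0})
print(first, second)
```

Separating the two steps is what lets a framework inspect the whole graph before any data flows through it.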

Think about what’s going on under the hood if you run into issues

Use TF’s summary operations (viewable in TensorBoard) to gain insight into your training

Practical Advice

Not learning well? Be careful with learning rates!
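A small sketch of why the learning rate matters: minimizing `f(w) = w ** 2` with gradient descent converges for a small step size and blows up for a large one. The function and the two rates are assumptions picked to make the effect visible.

```python
def minimize(learning_rate, steps=20, w=1.0):
    """Gradient descent on f(w) = w ** 2, whose derivative is 2 * w."""
    for _ in range(steps):
        w -= learning_rate * 2 * w
    return w


small = minimize(0.1)  # each step shrinks w, so it approaches the minimum at 0
large = minimize(1.1)  # each step overshoots the minimum, so |w| keeps growing
print(small, large)
```

If the loss oscillates or explodes during training, lowering the learning rate is a sensible first thing to try.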

Divide your dataset into training and test sets to see how well your NN generalizes
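One simple way to do the split is to shuffle the examples and hold out a fraction for testing. The helper name `train_test_split`, the 20% test fraction, and the toy dataset below are assumptions for this sketch.

```python
import random


def train_test_split(examples, test_fraction=0.2, seed=0):
    """Shuffle a copy of the examples and hold out a fraction as the test set."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


# Toy labeled dataset: (attribute, label) pairs.
examples = [(x, x % 2) for x in range(10)]
train, test = train_test_split(examples)
print(len(train), len(test))
```

The network is trained only on `train`; its accuracy on `test`, which it has never seen, gives an estimate of how well it generalizes.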

Get more data (!)

If you can’t get more data, fine-tune a pretrained model