Computers can’t understand the text the way we do, but they are good at processing numerical data. So, we need a model which can convert the textual information into numerical form. Hence, Text Embeddings are building blocks for any Natural Language Processing based system.

There are mainly two ways for embedding text, either we encode each word present in the text (Word Embedding) or we encode each sentence present in the text (Sentence Embedding)

Word Embedding

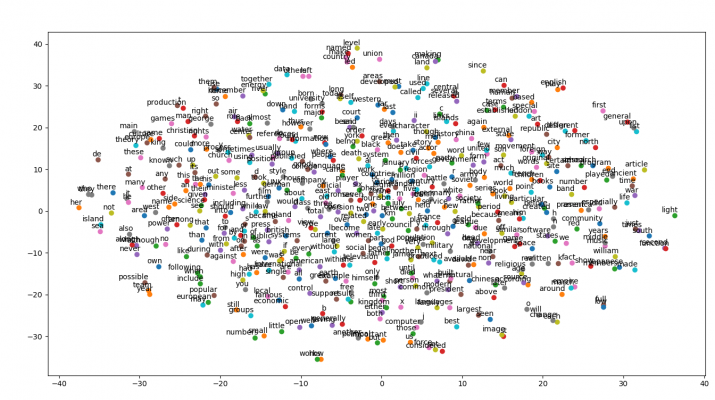

First let’s begin with word embedding, which is n-dimensional vector space representation of words such that semantically similar words (for instance, “boat” — “ship”) or semantically related words (for instance, “boat” — “water”) are closer in the vector space depending on the training data.

For capturing the semantic relatedness, the documents are used as context and while for capturing semantic similarity, the words are used as context.

The most widely used for word embedding models are word2vec and GloVe both of which are based on unsupervised learning.

Word2Vec

Word2Vec is basically a predictive embedding model. It mainly uses two types of architecture to produce vector representation of words…

https://medium.com/@kashyapkathrani/all-about-embeddings-829c8ff0bf5b