Jason Yosinski sits in a small glass box at Uber’s San Francisco, California, headquarters, pondering the mind of an artificial intelligence. An Uber research scientist, Yosinski is performing a kind of brain surgery on the AI running on his laptop. Like many of the AIs that will soon be powering so much of modern life, including self-driving Uber cars, Yosinski’s program is a deep neural network, with an architecture loosely inspired by the brain. And like the brain, the program is hard to understand from the outside: It’s a black box.

This particular AI has been trained, using a vast sum of labeled images, to recognize objects as random as zebras, fire trucks, and seat belts. Could it recognize Yosinski and the reporter hovering in front of the webcam? Yosinski zooms in on one of the AI’s individual computational nodes—the neurons, so to speak—to see what is prompting its response. Two ghostly white ovals pop up and float on the screen. This neuron, it seems, has learned to detect the outlines of faces. “This responds to your face and my face,” he says. “It responds to different size faces, different color faces.”

No one trained this network to identify faces. Humans weren’t labeled in its training images. Yet learn faces it did, perhaps as a way to recognize the things that tend to accompany them, such as ties and cowboy hats. The network is too complex for humans to comprehend its exact decisions. Yosinski’s probe had illuminated one small part of it, but overall, it remained opaque. “We build amazing models,” he says. “But we don’t quite understand them. And every year, this gap is going to get a bit larger.”

http://www.sciencemag.org/news/

Paul Voosen, Science Reporter:

Say, anytime you’re talking to your phone, that’s pretty much a neural net doing that. Neural nets do really well on image recognition, autonomous cars are coming soon, and the top-flight method for genetic sequencing is a neural network.

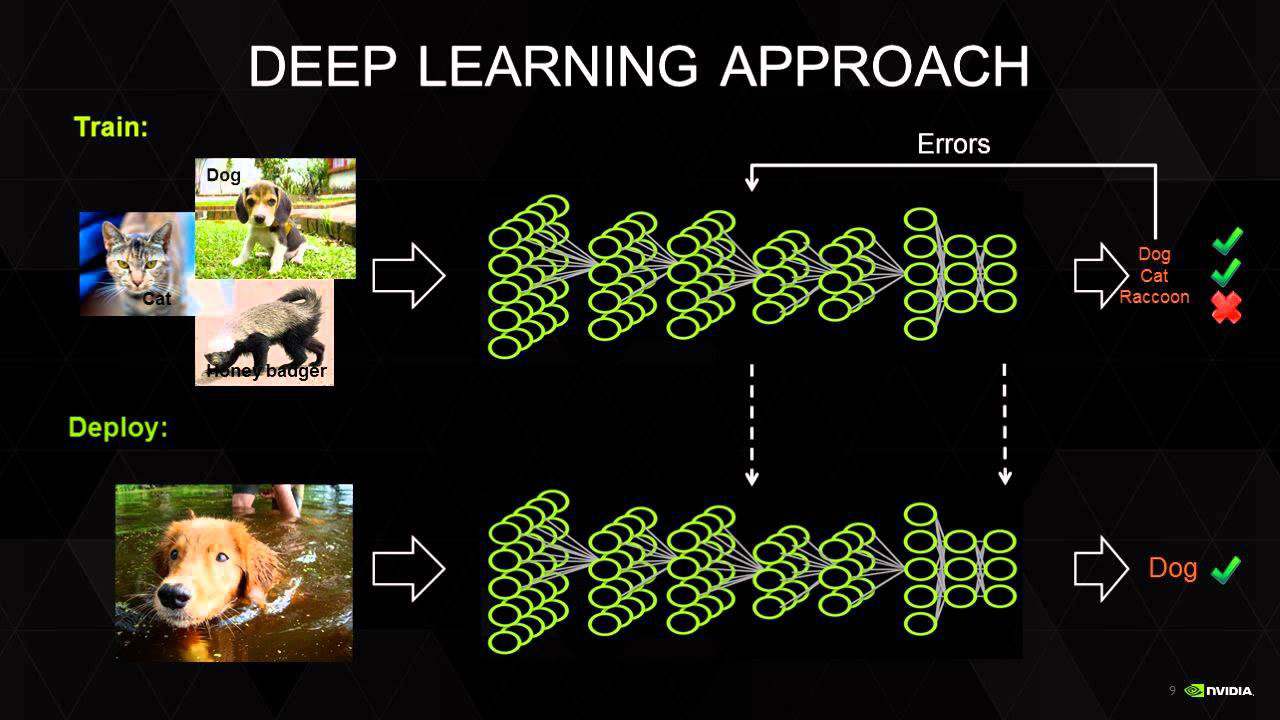

You can say they’re loosely inspired by the brain. It is a network of what people call neurons. They’re all connected to each other going down through the layers.

In a way they mimic neurons in that they’re little triggers. Each neuron has a kind of threshold, set by its weights, where it makes a decision. So the network takes in data; it could be a huge number of images. And then once the network is trained, you can put one image in at the front. The neurons fire when they see the thing that they want to see. So you get this kind of cascade moving through the neural network. At the start of training, though, that does really poorly. It just does horribly.
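The cascade described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the hand-picked weights, and the thresholds are hypothetical, and real networks use smoother activations and far more neurons.

```python
def step_neuron(inputs, weights, threshold):
    """Fire (1.0) when the weighted sum of the inputs exceeds the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1.0 if total > threshold else 0.0


def forward(inputs, layers):
    """Pass the data through each layer in turn: the 'cascade'."""
    activations = inputs
    for layer in layers:
        activations = [step_neuron(activations, w, t) for w, t in layer]
    return activations


# Hypothetical hand-picked network (not learned): each neuron is (weights, threshold).
layers = [
    [([1.0, 1.0], 0.5),    # fires if either input is on
     ([1.0, 1.0], 1.5)],   # fires only if both inputs are on
    [([1.0, -1.0], 0.5)],  # fires if "either" fired but "both" did not
]

for pattern in ([0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]):
    print(pattern, forward(pattern, layers))
```

Here the weights were chosen by hand; in a trained network they are found automatically from examples.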

But there’s this little magic trick called backpropagation, and this is very unbiological. Your neurons don’t do this at all. But at the end you’re like: “OK, well, you were wrong. But here’s a proper picture of a dog.” And now you send all that information back through the network again, and the network learns to do just a little bit better at saying: “Oh, this is a dog.”
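A minimal sketch of that correction loop, assuming a tiny network with two inputs, two hidden neurons, one output, sigmoid activations, and a squared-error loss. The starting weights, the single "dog" example, and the learning rate are all made up for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, net):
    """Forward pass: input -> hidden layer -> single output."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(net["W1"], net["b1"])]
    output = sigmoid(sum(w * h for w, h in zip(net["W2"], hidden)) + net["b2"])
    return hidden, output


def train_step(x, target, net, lr=0.5):
    """One round of 'you were wrong, here is the right answer'."""
    hidden, output = forward(x, net)
    # Error signal at the output for loss = 0.5 * (output - target)^2.
    delta_out = (output - target) * output * (1.0 - output)
    # Send the error back through the output weights to the hidden layer.
    delta_hidden = [delta_out * net["W2"][j] * hidden[j] * (1.0 - hidden[j])
                    for j in range(len(hidden))]
    # Nudge every weight a little in the direction that reduces the error.
    for j in range(len(hidden)):
        net["W2"][j] -= lr * delta_out * hidden[j]
        for i in range(len(x)):
            net["W1"][j][i] -= lr * delta_hidden[j] * x[i]
        net["b1"][j] -= lr * delta_hidden[j]
    net["b2"] -= lr * delta_out


# Hypothetical starting weights and a single made-up "dog" example.
net = {"W1": [[0.1, -0.2], [0.3, 0.4]], "b1": [0.0, 0.0],
       "W2": [0.2, -0.1], "b2": 0.0}
dog_picture, is_dog = [1.0, 0.5], 1.0

_, before = forward(dog_picture, net)
for _ in range(500):
    train_step(dog_picture, is_dog, net)
_, after = forward(dog_picture, net)
print("output before training:", before)
print("output after training: ", after)
```

Each call to `train_step` moves the output only slightly toward the target, which is why training needs many passes and many examples.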

It’s elegant math, and really the breakthroughs in the field were when they stopped trying to be so biological in the 1980s. At the time they didn’t work that great, but it turned out all they needed was a huge amount of processing power and a huge number of examples and they would really sing.

So in many of these cases accuracy is enough. But when you’re talking about really life-and-death decisions, like driving autonomous cars, making medical diagnoses, or pushing the frontiers of science to understand why a discovery was made, you really need to know what the AI is thinking, not just what its results are.

Since you have all these layers, the network engages in such complex decision-making that it is essentially a black box.

We don’t really understand how they think. All is not lost with this black box problem. People are actually trying to solve this now through a variety of different ways.

One researcher has created a toolkit to get at the activation of individual neurons in a neural network. This toolkit works by taking this individual neuron and telling the network to go back and find all the weights that really make that neuron just fire like crazy. Keep doing this thousands of times and you can see the kind of perfect input for this neuron.
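The toolkit's actual method is not spelled out here. One common way to search for a neuron's "perfect input", often called activation maximization, holds the weights fixed and repeatedly adjusts the input in the direction that makes the neuron fire harder. The sketch below assumes that approach for a single tanh neuron; the weights, the starting input, and the unit-length constraint on the input are hypothetical choices for illustration.

```python
import math


def activation(x, weights, bias):
    """Response of one neuron with a tanh activation."""
    return math.tanh(sum(w * xi for w, xi in zip(weights, x)) + bias)


def normalize(x):
    """Rescale the input to unit length so it cannot grow without bound."""
    norm = math.sqrt(sum(v * v for v in x))
    return [v / norm for v in x]


def maximize_activation(weights, bias, start, steps=1000, lr=0.1):
    """Gradient ascent on the input; the weights are never changed."""
    x = normalize(start)
    for _ in range(steps):
        a = activation(x, weights, bias)
        # Gradient of tanh(w.x + b) with respect to x is (1 - a^2) * w.
        grad = [(1.0 - a * a) * w for w in weights]
        x = normalize([xi + lr * g for xi, g in zip(x, grad)])
    return x


# Hypothetical neuron and starting input.
weights, bias = [0.5, -1.0, 2.0], 0.0
start = [1.0, 1.0, 1.0]

best_input = maximize_activation(weights, bias, start)
print("activation at start:", activation(normalize(start), weights, bias))
print("activation at end:  ", activation(best_input, weights, bias))
print("preferred input:    ", best_input)
```

For a single neuron like this one, the preferred input simply lines up with the weight vector. In a deep network the same procedure runs through many layers, and the result is an image that shows what the chosen neuron responds to.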

Then looking inside these layers he could see that some neurons learn these really complex, abstract ideas like a face detector– something that can detect any human face, no matter what it looks like. This is not a property you would expect an individual neuron to have. Most don’t, but some do.

Many neural net researchers think of it this way: You can think of the decision making of a neural net as a kind of terrain of valleys and peaks, with a ball rolling across it that represents a piece of data. So you can understand the one decision that was made in one valley, but in all those other valleys you have no idea what’s going on.

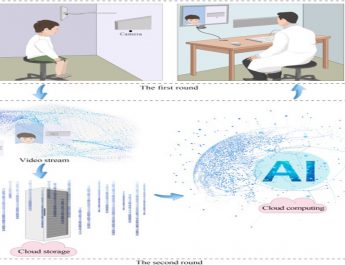

So one way to get at what an AI is thinking is to find a proxy for what it’s thinking. So one professor has taken the video game Frogger and trained an AI to play the game extremely well, because that’s fairly easy to do now. But, you know, what is it deciding to do? It’s really hard to know, especially in a sequence of decisions and a dynamic environment like that. So rather than trying to get the AI to explain itself, he asked people to play the video game and say what they were doing as they were playing it, and he recorded the state of the frog at the same time.

And then he found a way to use neural networks to translate between those two languages: the code of the game and what the players were saying. And then he imported that back into the game-playing network. So then the network was armed with these human insights. So, as the frog is waiting for a car to go by, it’ll say: “Oh, I’m waiting for a hole to open up before I go.” Or it could get stuck on the side of the screen and it would say: “Uh geez, this is really hard,” and curse.

So it’s kind of lashing human decision-making onto this network with more deep networks.

It’s all about trust. If you have a result and you don’t understand why it made that decision– how can you really advance in your research? You really need to know that there’s not some spurious detail that’s throwing things all off.

But these models are just getting larger and more complex and I don’t think anyone thinks they’ll get to a global understanding of what a neural network is thinking anytime soon. But if we can get a sliver of this understanding– science can really push forward and these neural networks can really play.